Abstract

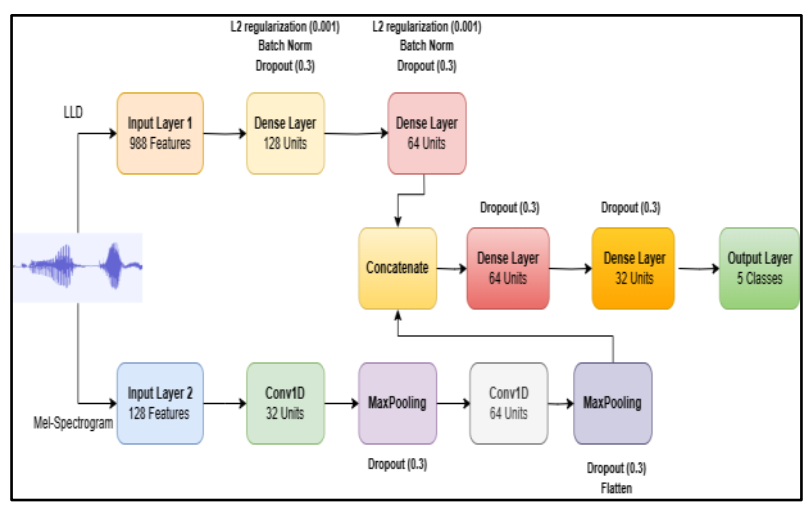

Arabic Speech Emotion Recognition (ASER) presents unique challenges due to linguistic diversity, phonetic complexity, and the limited availability of labeled datasets. This work presents a hybrid deep learning model that integrates a Multi-Layer Perceptron (MLP) and a Convolutional Neural Network (CNN) to classify emotions in Arabic speech, using the KEDAS dataset. The model processes two complementary feature sets: acoustic features, such as low-level descriptors, and Mel-spectrogram features that capture time-frequency information. Experimental results show that the hybrid architecture leverages the strengths of both feature types, reaching an accuracy of 98% in emotion recognition.
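The abstract does not give layer sizes, feature dimensions, or the exact fusion strategy, so the following is only a minimal sketch of how a two-branch MLP and CNN model of this kind could be wired together. It assumes late fusion by concatenation, and every size in it (an 88-dimensional acoustic vector, a 64 x 100 Mel-spectrogram, 5 emotion classes, 32 hidden units, 8 convolution kernels) is a hypothetical placeholder rather than a value from the paper. The weights are random and untrained, so the output illustrates only the data flow, not the reported accuracy.

```python
import numpy as np

rng = np.random.default_rng(0)

# All sizes below are illustrative assumptions, not values from the paper.
N_ACOUSTIC = 88               # length of the acoustic feature vector
N_MELS, N_FRAMES = 64, 100    # Mel-spectrogram shape (bands x frames)
N_CLASSES = 5                 # number of emotion classes
N_HIDDEN, N_KERNELS = 32, 8   # MLP hidden units, CNN kernels


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    z = z - z.max()           # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def conv2d_valid(x, kernels):
    """Naive 'valid' 2-D cross-correlation.

    x: (H, W), kernels: (K, kh, kw) -> output: (K, H-kh+1, W-kw+1)
    """
    k, kh, kw = kernels.shape
    h, w = x.shape
    out = np.empty((k, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = x[i:i + kh, j:j + kw]
            out[:, i, j] = (kernels * patch).sum(axis=(1, 2))
    return out


# Randomly initialised (untrained) parameters for both branches and the head.
params = {
    "mlp_w": rng.normal(0.0, 0.1, (N_ACOUSTIC, N_HIDDEN)),
    "mlp_b": np.zeros(N_HIDDEN),
    "kernels": rng.normal(0.0, 0.1, (N_KERNELS, 3, 3)),
    "out_w": rng.normal(0.0, 0.1, (N_HIDDEN + N_KERNELS, N_CLASSES)),
    "out_b": np.zeros(N_CLASSES),
}


def forward(acoustic, mel, p):
    # MLP branch: dense layer over the acoustic feature vector.
    mlp_out = relu(acoustic @ p["mlp_w"] + p["mlp_b"])
    # CNN branch: one convolution over the Mel-spectrogram,
    # followed by global average pooling per feature map.
    feature_maps = relu(conv2d_valid(mel, p["kernels"]))
    cnn_out = feature_maps.mean(axis=(1, 2))
    # Late fusion (assumed): concatenate both embeddings, then classify.
    fused = np.concatenate([mlp_out, cnn_out])
    return softmax(fused @ p["out_w"] + p["out_b"])


# Stand-in inputs; real inputs would be features extracted from an utterance.
acoustic = rng.normal(size=N_ACOUSTIC)
mel = rng.normal(size=(N_MELS, N_FRAMES))
probs = forward(acoustic, mel, params)
predicted_class = int(np.argmax(probs))
```

Here `probs` is a probability distribution over the assumed emotion classes. In practice the two branches would be trained jointly in a deep learning framework; the plain NumPy forward pass is used only to keep the sketch dependency-light.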

Article Details

Copyright (c) 2025 Dalal Djeridi, Bachir Said, Mourad Belhadj (Author)

This work is licensed under a Creative Commons Attribution 4.0 International License.

References

- R. Yahia Cherif, A. Moussaoui, N. Frahta, and M. Berrimi, “Effective speech emotion recognition using deep learning approaches for Algerian dialect,” in Proc. Int. Conf. Women in Data Science at Taif University (WiDSTaif), Taif, Saudi Arabia, Mar. 2021. doi: 10.1109/WIDSTAIF52235.2021.9430224.

- O. A. Mohammad and M. Elhadef, “Arabic speech emotion recognition method based on LPC and PPSD,” in Proc. 2nd Int. Conf. Computation, Automation and Knowledge Management (ICCAKM), Dubai, UAE, Jan. 2021, pp. 31–36. doi: 10.1109/ICCAKM50778.2021.9357769.

- S. Ouali and S. El Garouani, “Arabic speech emotion recognition using convolutional neural networks,” J. Electr. Syst., vol. 20, no. 7, pp. 2649–2657, 2024.

- A. Kaloub and E. A. Elgabar, “Speech-based techniques for emotion detection in natural Arabic audio files,” Int. Arab J. Inf. Technol., vol. 22, no. 1, pp. 139–157, Jan. 2025. doi: 10.34028/iajit/22/1/11.

- M. Belhadj, I. Bendellali, and E. Lakhdari, “KEDAS: A validated Arabic speech emotion dataset,” in Proc. Int. Symp. Innovative Informatics of Biskra (ISNIB), Biskra, Algeria, 2022. doi: 10.1109/ISNIB57382.2022.10075694.

- B. Mourad, E. Lakhdari, and I. Bendellali, “Kasdi-Merbah (University) emotional database in Arabic speech,” Dec. 2023. doi: 10.35111/qqer-qz15.

- “openSMILE,” Accessed: Apr. 4, 2025. [Online]. Available: https://audeering.github.io/opensmile/get-started.html.

- M. E. Elalami, S. M. K. Tobar, S. M. Khater, and E. A. Esmaeil, “Texture feature and Mel-spectrogram analysis for music sound classification,” Int. J. Adv. Comput. Sci. Appl., 2024. [Online]. Available: https://www.ijacsa.thesai.org.
